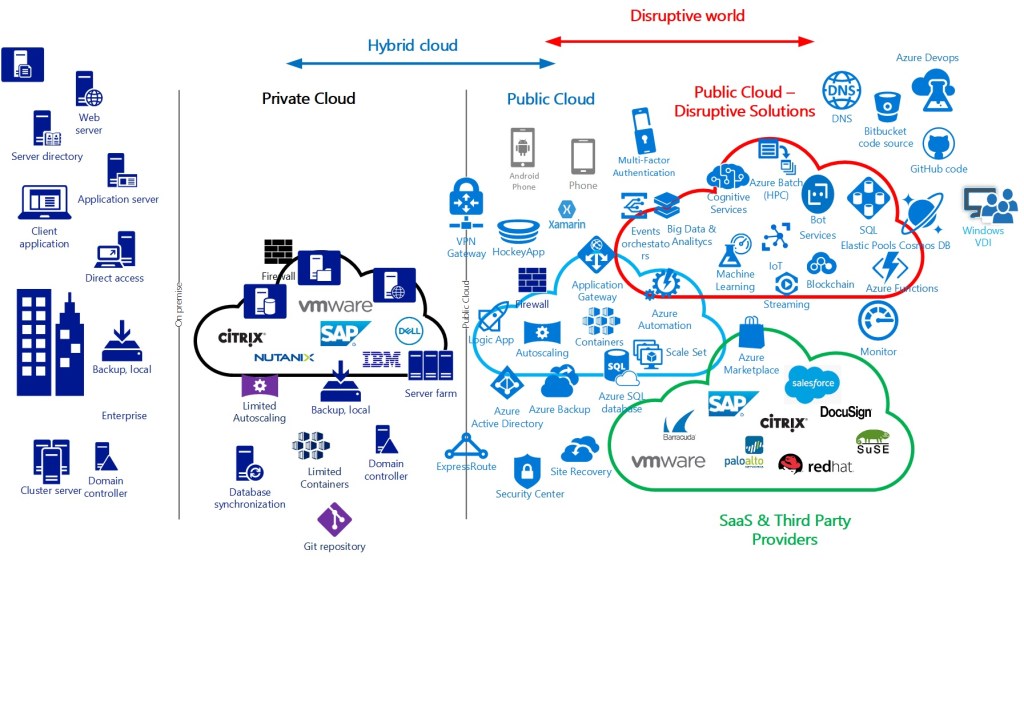

AWS (Amazon), Azure (Microsoft) and GCP (Google) have been increasing the number of their hyperscale data centers in many regions over the last few years, backed by millions invested in submarine cables to reduce latency. Southern Europe is no exception: just take a look at Italy, Spain and France to see what is happening.

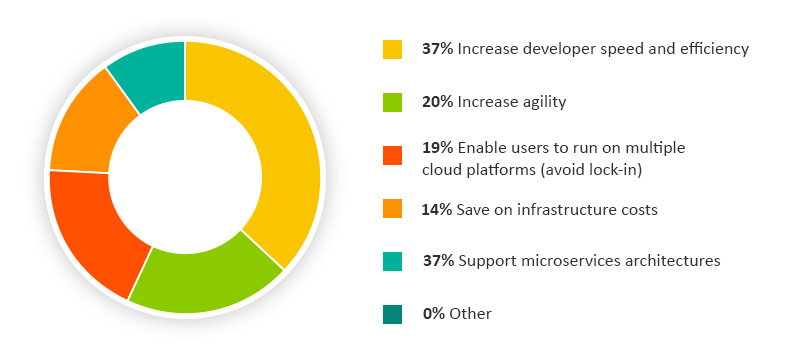

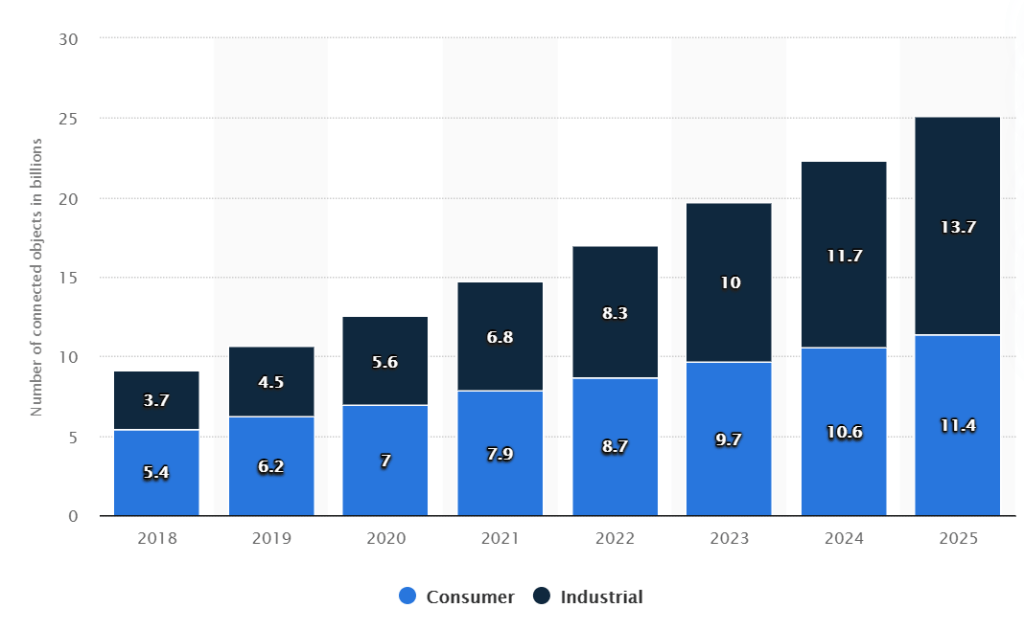

Public cloud providers know that many customers will move thousands of services to the cloud in the coming years. The process started some years ago, but it is accelerating even further, driven by the pandemic and the need to provide remote services, to analyse data faster and more efficiently, by the huge growth of sensors measuring almost everything in our lives, and by a global market where beating competitors on any continent requires innovation.

The 7 Rs help CIOs and CTOs take the right decisions: what makes sense to move to the cloud and what does not, what the priorities are, and, moreover, what impact and effort the transformation of their business will require.

Moving to the cloud with a clear perspective on the outcomes and goals to achieve will bring value to your customers, provided you evaluate each of your IT services with care and take decisions aligned with your business. Some applications could be retired, others would enter a modernization cycle, and others would just be resized to reduce cost and improve resilience.

Let's explain the 7 Rs, from the simplest scenarios to the most complex:

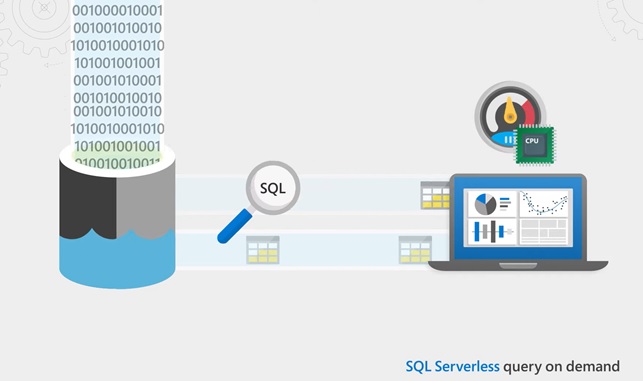

Retire. Some applications are not used anymore; just a couple of users need to run some queries from time to time. In that case it may be better to move the old data to a data warehouse and retire the application.

Retain. It literally means “do nothing at all”. Maybe the application uses an API or backend from an on-premises solution with some compliance limitations, or maybe it was recently upgraded and you want to amortize the investment for a while.

Repurchase. Here you have the opportunity to change the IT solution. Let's say you are not happy with your on-premises firewall and think it is better to switch to a different vendor whose firewall is better adapted to AWS or Azure, or even to move some applications from IaaS to SaaS.

Relocate. For example, relocate the ESX hypervisor hosting your database and web services to VMware Cloud on AWS / Azure / GCP, or move your legacy Citrix server running Windows Server 2008 R2 to a dedicated host on AWS.

Rehost. Also known as lift and shift. Move some VMs with clear dependencies between them to the cloud just to get better backup, cheaper replication across several regions, and right-sized compute to reduce cost.

Replatform. Lift and optimize your application to some extent. For instance, you move your web services from a farm of VMs on VMware behind an on-premises HLB (Hardware Load Balancer) to a load balancing service on Azure with App Service, where you can host your business logic and migrate your PHP or Java application. As a result, you no longer have to worry about operating system patching or security at the Windows Server level, and you can even eliminate the Windows Server license.

Refactor. The most complex scenario. You have a big monolithic database with lots of applications reading and writing that data heavily, so any failure in a component causes a general failure. You need to move the applications and the monolithic database and modify their architecture to take full advantage of cloud-native features, improving performance and scalability and reducing risk. Here you need to decouple your components and move to microservices sooner or later.
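To make the decision process more concrete, here is a minimal sketch of a first-pass triage helper that maps the answers from an application assessment to one of the 7 Rs. Everything in it is hypothetical: the `AppProfile` fields, the `min_users` threshold and the rule order are illustrative assumptions, not a standard tool or a provider's API. In practice you would feed it data from your discovery and assessment tooling and review every suggestion by hand.

```python
from dataclasses import dataclass
from enum import Enum


class Strategy(Enum):
    RETIRE = "Retire"
    RETAIN = "Retain"
    REPURCHASE = "Repurchase"
    RELOCATE = "Relocate"
    REHOST = "Rehost"
    REPLATFORM = "Replatform"
    REFACTOR = "Refactor"


@dataclass
class AppProfile:
    # Hypothetical assessment answers; not a standard schema.
    name: str
    active_users: int
    must_stay_on_premises: bool = False    # compliance or on-premises backend dependency
    recently_upgraded: bool = False        # investment still being amortized
    saas_replacement_available: bool = False
    runs_on_vmware: bool = False
    keep_hypervisor: bool = False          # move the VMware estate as-is
    managed_platform_fits: bool = False    # e.g. a PaaS web platform can run the code
    monolith_limits_scaling: bool = False  # single point of failure, needs decoupling


def suggest_strategy(app: AppProfile, min_users: int = 5) -> Strategy:
    """Return a first-pass suggestion using hypothetical heuristic rules."""
    if app.active_users < min_users:
        return Strategy.RETIRE
    if app.must_stay_on_premises or app.recently_upgraded:
        return Strategy.RETAIN
    if app.saas_replacement_available:
        return Strategy.REPURCHASE
    # A monolith that limits scaling is flagged before the lift-and-shift options.
    if app.monolith_limits_scaling:
        return Strategy.REFACTOR
    if app.runs_on_vmware and app.keep_hypervisor:
        return Strategy.RELOCATE
    if app.managed_platform_fits:
        return Strategy.REPLATFORM
    # Default: move the VMs as they are and right-size them later.
    return Strategy.REHOST


if __name__ == "__main__":
    portfolio = [
        AppProfile("legacy-reporting", active_users=2),
        AppProfile("firewall", active_users=50, saas_replacement_available=True),
        AppProfile("esx-db-cluster", active_users=40, runs_on_vmware=True, keep_hypervisor=True),
        AppProfile("php-web-farm", active_users=900, managed_platform_fits=True),
        AppProfile("core-db", active_users=3000, monolith_limits_scaling=True),
    ]
    for app in portfolio:
        print(f"{app.name}: {suggest_strategy(app).value}")
```

The cheap exits (Retire, Retain, Repurchase) are checked first because they avoid migration effort entirely; Rehost is the fallback when nothing else applies.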

I hope this helps you better understand these strategies for moving your applications to the cloud, so you can stay laser-focused on your needs and choose the best approach for each of them.

To sum up: use the right tools to evaluate your on-premises applications and IT services and, based on the 7 Rs, choose the right journey to the cloud for each of them.

Don't forget to leverage the full potential of the CAF (Cloud Adoption Framework), which I've covered before on my blog (https://wordpress.com/post/cloudvisioneers.com/287), together with the 7 Rs strategy.

Enjoy the journey to the cloud with me…see you soon.